Cloud Contact Center Implementation in Healthcare: A Leadership and Systems Engineering Perspective

Abstract

Healthcare contact centers are undergoing a structured transition as health systems move from legacy telephony to cloud-based, AI-enabled omnichannel platforms. These platforms increasingly function as centralized digital access hubs for scheduling, triage, navigation, and patient communication. The study proceeded in four steps. First, a leadership survey captured perspectives from six senior leaders across Digital Products, Experience Analytics, Patient Access, Digital Programs, Data Engineering, and Platform Engineering. Second, thematic analysis was applied to interpret these perspectives and identify recurring patterns related to technology adoption, organizational readiness, and systems-engineering awareness. Third, an executive-level systematic literature review on cloud contact centers, digital access, AI adoption, and systems engineering contextualized leadership insights and identified evidence-based enablers and barriers. Finally, twelve use cases were developed and organized into five strategic clusters that mirror how leaders naturally conceptualize outcomes, capabilities, and cross-functional dependencies.

The study identified clear patterns across strategic goals, operational Key Performance Indicators (KPIs), Artificial Intelligence (AI) adoption practices, data readiness, governance maturity, and systems-engineering gaps. Each domain contributed inputs that informed the next, producing aligned outputs that revealed where organizations succeed and where technical or governance limitations persist. Findings indicate that modernizing cloud contact centers is not an isolated IT upgrade but an enterprise-wide systems challenge requiring coordinated architecture, unified governance, and cross-functional collaboration across engineering, analytics, clinical access, and digital operations.

1. Introduction

Healthcare consumer expectations have shifted as patients increasingly seek convenience, flexibility, and engagement across multiple communication channels. These trends mirror consumer industries; however, healthcare introduces additional constraints related to regulation, clinical risk, and fragmented operational workflows. While digital access is becoming essential, current academic literature offers limited analysis of cloud-based healthcare contact centers, few leadership-driven models for access modernization, and little application of systems-engineering frameworks to guide enterprise transformation. This gap is significant, as access friction, workflow fragmentation, and inconsistent communication remain common across health systems.

In response to rising demand, organizations have expanded the digital front door, integrating tools for provider search, scheduling, registration, communication, and record access (Fisher, 2023). Evidence suggests that digital access improves convenience and satisfaction, particularly within virtual care pathways. Ivanova et al. (2024) observed increased telemedicine awareness and use between 2017 and 2022, reporting comparable satisfaction with in-person visits and improved logistical efficiency. However, inequities persist: lower-income patients express willingness to use digital tools but report lower comfort, while continuity with a known provider enhances trust and usability.

Digital access also supports underserved populations. Shigekawa et al. (2023) found that Federally Qualified Health Center patients report high satisfaction with telehealth, and audio-only visits remain essential for older adults and individuals without reliable broadband or devices. As digital pathways expand, traditional phone-based call centers alone cannot support increasing complexity. Cloud contact centers enable omnichannel engagement, intelligent routing, and CRM/EHR integration, creating scalable and coordinated patient navigation (AHA, 2024). Case studies from Tampa General Hospital and Yale New Haven Health demonstrate how centralized experience centers and strong governance can reduce friction, expand access, and improve operational performance.

These platforms also function as critical components of health equity, influencing who reaches care, how quickly support is delivered, and how communication is tailored across languages and modalities. Consequently, cloud contact centers shape patient experience, operational efficiency, and long-term system sustainability, underscoring the importance of leadership perspectives in understanding this transformation.

Current-State Challenges in Healthcare Access

The digital front door encompasses interactions across web, mobile, chat, SMS, patient portals, and telehealth (Fisher, 2023). Research consistently demonstrates that digital-first access reduces friction and improves patient satisfaction (AHA, 2024; Ivanova et al., 2024). Telemedicine studies further indicate that strong digital entry points particularly benefit rural patients, individuals with chronic disease, and those with mobility limitations (Shigekawa et al., 2023).

Cloud contact centers integrate voice, SMS, email, chat, mobile app interactions, and CRM-driven workflows with real-time routing and automation (Wallace, 2023). Omnichannel models adapted from retail require healthcare-specific governance, interoperability, and privacy oversight to operate safely and reliably (de Oliveira et al., 2023). These models also improve scalability and elasticity during demand surges, such as seasonal fluctuations or public health emergencies (AHA, 2024).

Equity, Access, and Strategic Importance

Digital tools offer flexible communication modalities that can reduce disparities (Shigekawa et al., 2023). However, risks persist due to gaps in digital literacy, language barriers, and limited broadband access. Because cloud contact centers directly influence who reaches care and the level of navigation provided, they function as a central mechanism for equitable access (AHA, 2024).

Problem, Purpose, and Contributions

Despite increased investment in cloud modernization, many organizations deploy solutions without fully addressing systems-engineering principles, data governance requirements, workflow redesign, or cross-functional leadership alignment. Academic literature on healthcare-specific contact centers remains limited, and equity studies often overlook patients who never reach care due to access friction.

This study integrates leadership insights with evidence on cloud modernization, AI adoption, and access transformation. The contributions include:

- A cross-domain leadership perspective, aligned with findings from Meri et al. (2023) and Stoumpos et al. (2023), emphasizing organizational structure, readiness, and alignment.

- An executive-oriented review of enablers and barriers, grounded in Hu & Bai (2014), Sachdeva et al. (2024), and Meri et al. (2023).

- A thematic analysis, following Braun and Clarke (2006), to identify patterns supporting use-case development.

- A leadership-focused systematic review revealing gaps between executive expectations and implementation complexity, reflecting concerns discussed by Stoumpos et al. (2023) and Sachdeva et al. (2024).

- A use-case architecture with KPIs, drawing on frameworks from Romero et al. (2016) and Padala (2025).

Taken together, this study examines how leaders conceptualize cloud contact center modernization, why it is essential for access and equity, how organizational capabilities shape implementation outcomes, and where systems-engineering gaps persist. This synthesis addresses a critical gap in the literature by positioning cloud contact centers as enterprise-wide orchestrators of patient access.

This article is intended for healthcare executives, patient access leaders, digital and IT leaders, analytics and data engineering leaders, and clinical operations stakeholders responsible for access strategy and infrastructure. By integrating leadership perspectives with systems-engineering principles and empirical evidence, the study frames cloud contact center modernization as an enterprise transformation challenge requiring coordinated governance, architectural alignment, and cross-functional leadership, not merely a technology upgrade.

2. Method: Leadership Survey, Thematic Analysis, and Systematic Review at an Executive Level

This study employed a qualitative, exploratory design integrating three coordinated components: (1) a structured leadership questionnaire, (2) a lightweight thematic analysis informed by Braun and Clarke’s (2006) methodology, and (3) an executive-level systematic review of literature on cloud contact centers, digital access, AI adoption, and systems engineering. Because the objective was to understand how senior leaders conceptualize cloud modernization within a large health system, purposive sampling of subject-matter experts was used, an approach appropriate for depth-oriented qualitative inquiry. The limited sample size reflects the small number of individuals with enterprise-wide responsibility for digital access, cloud infrastructure, analytics, and patient experience, whose roles situate their input within a systems-level view of modernization. This study captured leadership perspectives on organizational systems and did not collect patient data; therefore, it met criteria for Institutional Review Board (IRB) exemption.

2.1 Sample and Data Collection

Six senior healthcare leaders participated in a structured qualitative questionnaire examining their responsibilities and expectations related to Artificial Intelligence (AI), Machine Learning (ML), software ecosystems, cloud technologies, and systems-engineering principles. Participants represented key organizational subsystems, including patient access, digital product development, marketing and experience analytics, data engineering, and digital program management. A healthcare platform engineer conducted a technical review intended to function as a verification step, providing leadership with an engineering-grounded perspective.

Leader Roles

Associate Vice President of Patient Access – responsible for systemwide scheduling, registration, insurance verification, call center operations, and overall front-end performance optimization.

Associate Vice President of Digital Products – leads consumer-facing digital strategy and aligns modernization initiatives with organizational objectives.

Senior Director of Marketing & Experience Analytics – oversees enterprise analytics operations and KPI frameworks supporting experience-driven decision-making.

Senior Manager of Data Engineering – directs cloud-based data pipeline architecture, ETL processes, and scalable analytical infrastructure.

Senior Director of Digital Programs – manages enterprise CRM platforms, telephony systems, and multi-channel messaging integrations.

Platform Engineer (technical review) – provided cross-disciplinary interpretation of engineering considerations.

Questionnaire Structure.

The questionnaire began with items defining role, scope, and organizational responsibility. Subsequent items examined how leaders interact with AI/ML models, cloud architectures, and software ecosystems. Leaders were prompted to reflect on performance indicators, governance structures, funding considerations, and how technology supports value creation for internal and external stakeholders.

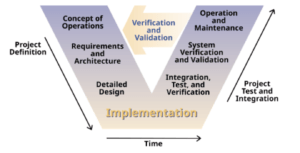

A final section assessed leaders’ familiarity with systems-engineering principles, particularly the V-Model; an image of the V-Model from Kossiakoff et al. (2020, 3rd ed.) was provided in the questionnaire. The AI-prompted platform engineering review was excluded from this assessment. The full questionnaire is provided in Appendix I.

2.2 Leadership Survey Design Overview

The leadership survey was structured to elicit senior leaders’ descriptions of responsibilities, technology touchpoints, performance measures, and governance mechanisms related to cloud modernization and AI-enabled systems. Survey items were organized into seven response domains reflecting recurring categories identified during instrument development and early familiarization with leadership roles. These domains functioned as data-collection strata, not analytical conclusions, and informed subsequent coding and thematic organization.

- Expectations of Cloud Technologies.

Responses related to cloud technologies were captured as descriptions of functional expectations and architectural characteristics. Leaders referenced scalability, interoperability, deployment speed, integration reliability, and security controls in relation to patient access workflows, analytics environments, and enterprise data delivery. Statements within this domain were treated as input descriptors reflecting how leaders articulated cloud-related requirements, constraints, and operational considerations. No evaluative judgments or outcome claims were derived at this stage.

The platform engineering review supplemented this domain by identifying technical elements referenced implicitly or explicitly in leadership responses, including API-based integrations, event-driven architectures, availability considerations, security controls, and observability mechanisms. These elements were recorded as technical annotations used later to validate architectural feasibility, not as prescriptive standards.

- Roles and Expectations of AI.

AI-related responses were categorized according to described use contexts and functional roles within organizational workflows. Leaders referenced automation, prediction, personalization, routing, analytics augmentation, and development support. These statements were classified based on where AI was described as being applied (e.g., patient access operations, analytics workflows, software development) rather than why or with what effect.

Mentions of generative AI, embedded vendor features, and large language models were recorded as technology references, and governance-related considerations were retained as separate attributes rather than synthesized interpretations. AI was treated as a capability referenced across domains rather than a standalone system component.

- KPIs and Performance Expectations.

KPI-related responses were recorded as measurement categories and metric types used by leaders to monitor operational and digital performance. Metrics referenced included call volume, wait times, abandon rates, handle time, scheduling completion, engagement indicators, CSAT, NPS, and reliability measures. These were logged as measurement artifacts associated with specific subsystems (e.g., contact center operations, digital access, analytics).

Before–after comparisons and references to performance improvement were retained as descriptive statements without inferential weighting. Differences in KPI emphasis across leadership roles were preserved for later comparative analysis rather than resolved at this stage.

- Governance of Technology, AI, and Cloud Systems.

Governance-related responses were categorized into structural governance elements (intake processes, oversight bodies, role definitions) and control mechanisms (access controls, compliance frameworks, data stewardship). Regulatory references (e.g., HIPAA, SOC 2, HITRUST) were recorded as compliance touchpoints rather than evaluative benchmarks.

AI-specific governance concerns, including oversight, transparency, and risk management, were treated as governance attributes linked to system design considerations. No conclusions regarding adequacy, maturity, or alignment were drawn within this section.

- Data Readiness and Infrastructure Requirements.

Statements related to data readiness were classified according to data-layer characteristics (completeness, accuracy, consistency), integration dependencies (CRM, telephony, EHR, analytics), and infrastructure attributes (scalability, cloud-native tooling, pipeline reliability). Differences in emphasis across leadership roles were retained as categorical distinctions.

Descriptions of data challenges or prerequisites were recorded as input conditions for AI and automation use cases, without assessing sufficiency or impact at this stage.
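The study recorded data-readiness statements without measuring them. To make the data-layer characteristics named above (completeness and consistency) concrete, the following Python sketch shows one way such input conditions might be scored for a batch of contact center interaction records before they feed an AI or automation use case. The record fields (`patient_id`, `channel`, `queue`) and the allowed channel vocabulary are hypothetical assumptions, not fields reported by participants or taken from any vendor schema.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical channel vocabulary used for the consistency check (assumption).
ALLOWED_CHANNELS = {"voice", "sms", "chat", "email", "app"}


@dataclass
class InteractionRecord:
    # Hypothetical fields; real CRM/telephony schemas will differ.
    patient_id: Optional[str]
    channel: Optional[str]
    queue: Optional[str]


def completeness(records: list[InteractionRecord], field: str) -> float:
    """Share of records where `field` is populated (not None and not empty)."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if getattr(r, field))
    return filled / len(records)


def channel_consistency(records: list[InteractionRecord]) -> float:
    """Share of records whose channel label belongs to the agreed vocabulary."""
    if not records:
        return 0.0
    valid = sum(1 for r in records if r.channel in ALLOWED_CHANNELS)
    return valid / len(records)


batch = [
    InteractionRecord("p1", "voice", "scheduling"),
    InteractionRecord(None, "sms", "scheduling"),   # missing identity
    InteractionRecord("p3", "Phone", "billing"),    # non-standard channel label
    InteractionRecord("p4", "chat", None),          # missing queue
]

print(completeness(batch, "patient_id"))
print(channel_consistency(batch))
```

In this toy batch both checks return 0.75. Thresholds for what counts as "ready" would be an organizational decision; the sketch only shows how such conditions could be expressed as measurable inputs.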

- Systems Engineering Familiarity.

Responses concerning systems engineering were categorized based on exposure level and conceptual framing. Leaders’ descriptions of systems engineering as a lifecycle-oriented or requirements-driven approach were recorded without interpretation. The V-Model explanation included in the questionnaire served as a reference artifact; reactions to it were treated as usability and comprehension observations, not assessments of competence or effectiveness. This domain captured familiarity indicators only and did not attempt to infer readiness or capability.

- Integrating the Platform Engineering Perspective.

The platform engineering contribution was treated as a contextual validation artifact rather than a primary data source. Its role was to document technical considerations referenced in leadership responses and to identify commonly recognized engineering controls relevant to cloud and AI-enabled systems.

The narrative generated through the AI prompt and validated by a practicing platform engineer was retained as a supplementary interpretive layer, explicitly separated from leadership data. Its function was to support later architectural mapping, not to synthesize leadership intent or meaning within Section 2.2.

2.3 Thematic Analysis Approach

A lightweight thematic analysis was conducted to interpret senior healthcare leaders’ perspectives and identify recurring patterns related to technology adoption, organizational readiness, and systems-engineering awareness. This analytic method functioned as an intermediate subsystem, linking qualitative insights from the leadership questionnaire with the conceptual and architectural frameworks derived from the executive-level systematic review (Kossiakoff et al. 2020, 5–15, 30–38). The approach suited the study’s objective of understanding how leaders define AI use cases, architectural layers, and departmental responsibilities within a modern digital healthcare ecosystem.

Thematic analysis was selected because the dataset was moderate in size, the goal was pattern identification rather than theory generation, and the method allows flexible mapping of qualitative insights to systems-engineering constructs. Unlike grounded theory, the study did not aim to develop a novel social theory; instead, it required a structured and transparent method to translate leadership expectations into architectural requirements (Kossiakoff et al. 2020, 21–29, 30–38).

The analysis followed Braun and Clarke’s (2006) six-phase model, chosen for its flexibility and suitability for applied exploratory work. A lightweight implementation was appropriate given the modest dataset and operational focus. Manual coding was used, consistent with the authors’ guidance that thematic analysis does not require specialized software for manageable datasets with primarily descriptive aims. Manual coding also allowed close, engineering-style engagement with the data, which was necessary when mapping qualitative statements to systems-engineering constructs such as functional decomposition and architectural layering (Kossiakoff et al. 2020, 80–115).

Phase 1: Familiarization

Leadership questionnaire responses were reviewed repeatedly to understand organizational scope, strategic priorities, and technology expectations across patient access, digital product innovation, marketing analytics, data engineering, and digital program operations. Early memos highlighted repeated references to automation, integration challenges, cloud readiness, governance, and KPI frameworks. These early signals functioned as preliminary subsystem indicators that would later support the formation of thematic clusters.

Phase 2: Initial Coding

Initial descriptive codes were generated to capture meaningful statements in leaders’ responses. Examples include:

- AI-supported workflow automation

- Integration dependencies

- Data pipeline reliability

- Security expectations

- Business impact metrics

Coding was performed by a single analyst, introducing a potential interpretive limitation. To mitigate bias, codes were grounded in participants’ own language and analytic memos were used to separate direct observations from inferred interpretations. Additionally, the platform engineering review served as a technical verification step to confirm whether coded statements aligned with established engineering expectations and interoperability constraints.

Phase 3: Generating Initial Themes

Codes were organized into preliminary thematic clusters. Early clusters reflected leaders’ expectations of cloud technologies, roles of AI, priority KPIs, governance structures, data readiness, and familiarity with systems engineering. These clusters were compared against constructs identified in the systematic review, indicating consistency between leadership experience and scholarly research. At this stage, thematic clusters served as intermediate outputs feeding into the larger systems-level architecture of the study (Kossiakoff et al. 2020, 95–115, 145–155).

Phase 4: Reviewing Themes

Themes were reviewed to ensure coherence, internal consistency, and alignment with the full dataset. The review avoided defining themes too broadly, which risks losing analytic specificity, or too narrowly, which could fragment related concepts. This iterative step ensured that each theme represented a distinct subsystem while remaining connected to the overall architectural purpose of the study. Themes were allowed to emerge from the data rather than being forced to align with preconceived architectural categories.

Phase 5: Refining and Naming Themes

Themes were refined to produce clear, usable analytic constructs for downstream systems-engineering integration. Examples include:

- Statements about data quality, ETL processes, API dependencies, and measurement fidelity were integrated into a broader Data Readiness theme, mapping onto architectural needs such as data-layer reliability and integration-layer orchestration.

- Comments about AI-enabled personalization, workflow automation, routing logic, and analytics feature development were synthesized into AI and Automation Use Cases, associated with application-layer functionalities.

- Governance-related responses informed a Governance and Risk Management theme, reflecting oversight, security, and responsible AI practices.
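One way to keep the move from Phase 2 codes to Phase 5 themes traceable is to record it as an explicit lookup, in the spirit of a requirements traceability matrix. The Python sketch below encodes the codes and themes named above. The assignment of "Business impact metrics" to a KPI theme, the layer labels for governance and KPIs, and the statement IDs are illustrative assumptions, not the study's recorded coding frame.

```python
from collections import defaultdict

# Phase 2 descriptive codes mapped to (Phase 5 theme, architectural layer).
# Theme names follow the text; the KPI theme and the governance/KPI layer
# labels are hypothetical, added only for illustration.
CODE_TO_THEME = {
    "AI-supported workflow automation": ("AI and Automation Use Cases", "application layer"),
    "Integration dependencies": ("Data Readiness", "integration layer"),
    "Data pipeline reliability": ("Data Readiness", "data layer"),
    "Security expectations": ("Governance and Risk Management", "cross-cutting controls"),
    "Business impact metrics": ("KPIs and Performance Alignment", "measurement layer"),
}


def trace(coded_statements):
    """Group (statement_id, code) pairs by theme, keeping source IDs for audit."""
    by_theme = defaultdict(list)
    unmapped = []
    for statement_id, code in coded_statements:
        if code in CODE_TO_THEME:
            theme, _layer = CODE_TO_THEME[code]
            by_theme[theme].append(statement_id)
        else:
            # Codes outside the frame are surfaced rather than silently dropped.
            unmapped.append(statement_id)
    return dict(by_theme), unmapped


# Hypothetical statement IDs (leader role abbreviation + response number).
statements = [
    ("PA-03", "AI-supported workflow automation"),
    ("DE-01", "Data pipeline reliability"),
    ("DP-02", "Integration dependencies"),
    ("MA-04", "Attribution clarity"),
]
themes, unmapped = trace(statements)
print(themes)
print(unmapped)
```

Keeping statement IDs attached to each theme means any downstream requirement can be traced back to the leadership responses that motivated it, and unmapped codes flag where the thematic frame may need revision.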

A core limitation of lightweight thematic analysis is its descriptive orientation; it does not infer causal pathways or system dependencies. These limitations were addressed by subsequently linking themes to systems-engineering tools, such as functional decomposition and architectural layering, which provided analytical structure beyond descriptive coding (Kossiakoff et al. 2020, 80–115).

Phase 6: Producing the Final Thematic Narrative

The final thematic structure was organized to integrate directly with the study’s conceptual and architectural frameworks. Themes were translated into system-level constructs that informed:

- The use-case architecture

- The alignment of leadership expectations with cloud and AI capabilities

- The identification of gaps in governance, data readiness, and engineering literacy

- The development of KPIs and performance-alignment models

Although thematic analysis originates from psychology, its structured, repeatable phases align well with systems-engineering practices, particularly for synthesizing narrative requirements, stakeholder needs, and architectural considerations (Kossiakoff et al. 2020, 17–29, 30–38). This process provides a transparent and traceable method for converting qualitative statements into formal inputs for system design, while acknowledging limitations related to dataset size, single-analyst coding, and descriptive scope. The results in Section 3 present the synthesized outputs of the thematic analysis and serve as inputs to the architectural framework presented in Section 4.

2.4 Systematic Review at an Executive Level

An executive systematic review approach was selected because the academic literature on cloud contact centers remains sparse and fragmented across domains such as digital access, AI, governance, and telehealth. A structured, reproducible review was necessary to synthesize evidence from adjacent fields and assess alignment between leadership expectations and established research. Unlike narrative reviews, this approach provides transparency, reduces selection bias, and enables traceable integration of evidence into the use-case framework and architectural model.

Although few peer-reviewed studies explicitly examine cloud contact centers in healthcare, related literature on digital front doors, telemedicine, conversational agents, automation, revenue cycle processes, data governance, and AI safety forms a coherent evidence base. These studies reinforce leadership insights by positioning cloud contact centers as enterprise access hubs supported by AI, automation, and integrated workflows. The literature also highlights persistent systems-level gaps, including uneven safety evaluation, non-standardized measurement methods, and limited analysis of workforce and change-management impacts. As a result, cloud contact center modernization emerges not as a technology procurement event but as a broader organizational transformation.

3. Results

3.1 Leadership Perspectives: Key Themes

Although leaders operated within distinct subsystems, their reflections consistently framed the cloud contact center as a strategic, enterprise-level capability rather than a narrow telephony enhancement. Leaders expressed aligned expectations regarding strategic objectives, AI/ML adoption, performance measurement, governance requirements, data readiness, and the application of systems-engineering principles. Together, these perspectives reflected a cohesive set of inputs supporting the study’s system-level interpretation. From the experience analytics perspective, however, these expectations needed to be tied to measurable outcomes.

Experience analytics leadership provided pre–post implementation KPI comparisons illustrating measurable operational and digital access improvements following AI-enabled contact center enhancements (Appendix II). Over a six-month period, monthly inbound call volume decreased by 12%, average handle time declined from 6.0 to 4.8 minutes, and call abandonment fell from 12% to 8%. Concurrently, online scheduling starts increased by 40%, completed digital appointments rose by 50%, and CSAT scores improved from 4.1 to 4.4. While these results reflect organizational experience rather than a controlled experimental design, they illustrate the performance shifts leaders expect when cloud platforms, AI, and workflow redesign are aligned.
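The reported figures mix absolute values and relative changes. The short Python sketch below recomputes absolute and relative change for the three KPIs that are reported with both a baseline and a follow-up value. It makes explicit, for example, that the drop in abandonment from 12% to 8% is 4 percentage points, or roughly a one-third relative reduction. Call volume, online scheduling starts, and completed digital appointments are reported only as relative changes, so they are not recomputed.

```python
# Pre/post values as reported in the text (Appendix II).
kpis = {
    "average_handle_time_min": (6.0, 4.8),
    "abandonment_rate_pct": (12.0, 8.0),
    "csat_score": (4.1, 4.4),
}


def absolute_change(pre: float, post: float) -> float:
    """Difference in the metric's own units (minutes, percentage points, score)."""
    return post - pre


def relative_change_pct(pre: float, post: float) -> float:
    """Percent change relative to the pre-implementation baseline."""
    return (post - pre) / pre * 100


for name, (pre, post) in kpis.items():
    print(f"{name}: {absolute_change(pre, post):+.1f} absolute, "
          f"{relative_change_pct(pre, post):+.1f}% relative")
```

Running this prints a 20.0% relative reduction in handle time (1.2 minutes), a 33.3% relative reduction in abandonment (4.0 percentage points), and a 7.3% relative increase in CSAT (0.3 points). These are descriptive recalculations of the reported numbers, not tests of significance.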

To provide a consolidated view of these multi-domain expectations, Table 1 summarizes leadership expectations and key risks across participating functional areas. These findings reflect practice-based, experiential insights derived from executive roles rather than experimental evaluation.

Table 1. Summary of Leadership Domains, Expectations, and Risks

| Leadership Domain | Primary Expectations | Key Risks / Barriers |
| --- | --- | --- |
| Patient Access | Reduce friction, improve routing, shorten waits, support omnichannel continuity | Workforce readiness, inconsistent workflows, vendor overpromising |
| Digital Products | Seamless web/mobile/app integration; CRM-aligned experiences | Fragmented identity, API instability, privacy constraints |
| Marketing & Experience Analytics | Reliable KPIs, attribution clarity, measurement fidelity | Data quality gaps, inconsistent event tracking |
| Data Engineering | Scalable pipelines, real-time delivery, cloud-native tooling, observability | Fragile pipelines, dependency failures, unmet governance requirements |
| Digital Programs | Unified CRM + telephony + messaging orchestration; integration maturity | Complex change management, interoperability failures |
| Platform Engineering | Secure, resilient, event-driven architectures; HIPAA-aligned controls | Security/identity gaps, lack of architectural governance |

From Telephony to Enterprise Access Platforms

Leadership perspectives showed strong alignment in framing the cloud contact center as an enterprise system of access, experience, and operational flow. Although the domains differ in scope, each leader described expectations that contribute as inputs to a unified organizational objective.

- Patient Access leadership emphasized reducing friction at the “front door” through improved service levels, abandonment reduction, and accurate routing.

- Digital products and programs highlighted the need for omnichannel continuity spanning web, mobile, messaging, CRM, and scheduling systems.

- Analytics and data engineering viewed the contact center as a critical source of event data needed for personalization, routing intelligence, and capacity planning.

- Platform engineering reinforced the importance of secure, API-driven, event-based architectures matched to organizational risk and regulatory demands.

Across all subsystems, leaders viewed the cloud contact center as a data-driven access hub essential for digital transformation and enterprise coordination.
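Analytics and data engineering leaders described the contact center as a source of event data for routing intelligence and capacity planning. As a minimal sketch, assuming a hypothetical event shape with a channel, a queue, and a timestamp, the Python snippet below aggregates interaction events into hourly volumes per channel, the kind of series a capacity-planning model might consume. The event fields are assumptions for illustration, not a schema from any participating organization or vendor platform.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class InteractionEvent:
    # Hypothetical event fields; real platforms emit richer, vendor-specific payloads.
    channel: str           # e.g. "voice", "chat", "sms"
    queue: str             # e.g. "scheduling", "billing"
    occurred_at: datetime


def hourly_volume_by_channel(events):
    """Count events per (channel, hour bucket) for downstream capacity planning."""
    counts = Counter()
    for e in events:
        # Truncate the timestamp to the start of its hour.
        hour = e.occurred_at.replace(minute=0, second=0, microsecond=0)
        counts[(e.channel, hour)] += 1
    return counts


events = [
    InteractionEvent("voice", "scheduling", datetime(2024, 3, 4, 9, 5)),
    InteractionEvent("voice", "scheduling", datetime(2024, 3, 4, 9, 40)),
    InteractionEvent("chat", "scheduling", datetime(2024, 3, 4, 9, 15)),
    InteractionEvent("voice", "billing", datetime(2024, 3, 4, 10, 2)),
]

volumes = hourly_volume_by_channel(events)
for (channel, hour), n in sorted(volumes.items()):
    print(channel, hour.strftime("%H:00"), n)
```

The same event stream could be grouped by queue instead of channel; the point of the sketch is that consistent, well-formed events are a precondition for the routing and planning uses leaders described.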

Perceived Role of AI and Machine Learning

Leaders described AI/ML as embedded capabilities rather than standalone tools, with each domain identifying how AI strengthens its operational outputs.

- Patient Access emphasized AI-supported routing, forecasting, and task automation.

- Digital and marketing leaders focused on personalization, intent detection, and channel orchestration.

- Data engineering highlighted LLM-enabled acceleration of coding and testing.

- Digital programs noted rapid vendor adoption of embedded AI, requiring prioritization and governance.

Across domains, leaders assumed that AI value is contingent on data quality, privacy safeguards, integration maturity, and clearly defined use cases.

Success Measures and KPIs

Leaders framed modernization expectations through outcome-oriented KPIs, treating performance metrics as verification outputs for cloud and AI investments.

- Operational metrics: service levels, ASA, abandonment, handle time, queue performance.

- Digital funnel metrics: online scheduling conversion, channel deflection, attribution accuracy.

- Engineering/financial metrics: automation rates, cost per contact, iteration speed.

- Experience metrics: CSAT, NPS, and effort scores.
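Definitions of the operational metrics listed above vary across vendors and organizations (for example, whether short abandons are excluded from service level). As a sketch under commonly used definitions, which are assumptions rather than the participating organization's formulas, the Python snippet below computes service level, average speed of answer (ASA), abandonment rate, and average handle time from hypothetical per-call records. The 30-second service-level threshold is likewise an assumed parameter.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Call:
    wait_sec: float                      # time in queue before answer or abandon
    answered: bool
    handle_sec: Optional[float] = None   # talk + hold + after-call work, if answered


def contact_center_kpis(calls: list[Call], sl_threshold_sec: float = 30.0) -> dict:
    offered = len(calls)
    answered = [c for c in calls if c.answered]
    abandoned = offered - len(answered)
    within_sl = sum(1 for c in answered if c.wait_sec <= sl_threshold_sec)
    return {
        # Share of offered calls answered within the threshold (one common variant).
        "service_level": within_sl / offered if offered else 0.0,
        # Average speed of answer: mean queue wait of answered calls only.
        "asa_sec": sum(c.wait_sec for c in answered) / len(answered) if answered else 0.0,
        # Share of offered calls abandoned before answer.
        "abandon_rate": abandoned / offered if offered else 0.0,
        # Average handle time across answered calls.
        "aht_sec": sum(c.handle_sec or 0.0 for c in answered) / len(answered) if answered else 0.0,
    }


calls = [
    Call(wait_sec=10, answered=True, handle_sec=300),
    Call(wait_sec=45, answered=True, handle_sec=240),
    Call(wait_sec=20, answered=True, handle_sec=360),
    Call(wait_sec=90, answered=False),
]
kpis = contact_center_kpis(calls)
print(kpis)
```

For these four illustrative calls, service level is 0.5, ASA is 25 seconds, abandonment is 0.25, and AHT is 300 seconds. Fixing such definitions in shared code is one way to reduce the measurement inconsistencies leaders raised, since the same call data can otherwise yield different KPI values across teams.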

Leaders emphasized that continued investment was contingent on demonstrable improvements, not theoretical gains.

Implementation Risks and Organizational Constraints

Several risks and constraints emerged across leadership roles:

- Data friction: fragmented identity, inconsistent event tracking, weak interoperability, and fragile pipelines.

- Change management: workforce readiness and operational adoption issues.

- Vendor overpromising: misalignment between marketed features and organizational readiness.

- Governance: security, identity, role-based access, and PHI-protected workflows.

Enablers included strong governance structures, reliable data pipelines, standardized intake processes, and clear architectural documentation, which together served as a stabilizing subsystem within modernization efforts.

Systems Engineering Mindset Across Leadership

Leaders demonstrated a systems-engineering orientation even when not stated explicitly. Common patterns included:

- Linking requirements to testing and operational outcomes

- Emphasizing cross-functional alignment

- Recognizing dependencies across cloud, data, workflow, and governance layers

- Finding the V-Model conceptually useful while preferring hybrid agile delivery

Overall, leaders conceptualized cloud modernization as an interdisciplinary systems effort requiring coordinated workflows, architectural traceability, and structured lifecycle management.

3.2 Executive-Level Systematic Review of Cloud Contact Center Literature

Digital Front Doors, Patient Access, and Cloud Contact Centers

Although the term “cloud contact center” is uncommon in academic literature, studies on digital access demonstrate improved convenience, equity, and outcomes when supported by well-defined workflows, particularly for rural and underserved populations (Ezeamii et al., 2024). Industry analyses likewise position digital access centers as strategic priorities for healthcare modernization (HIMSS, 2022).

Commercial platforms are marketed as designed to support HIPAA-regulated environments, with capabilities including EHR integration, omnichannel routing, and embedded analytics. This positioning suggests that technical foundations are mature, even as academic terminology continues to lag operational practice.

Key findings relative to leadership priorities:

- Confirms that cloud contact centers operate as enterprise access platforms rather than telephony upgrades.

- Reinforces leaders’ emphasis on omnichannel continuity and workflow integration.

- Validates that cloud platforms both produce and consume high-value event data.

AI, Conversational Agents, and Self-Service for Access

The strongest evidence base concerns conversational agents, chatbots, and LLM-based hybrid systems. Systematic reviews report positive usability and mixed effectiveness across education, navigation, and triage, alongside inconsistent safety evaluation and variable validation (Laranjo et al., 2018). More recent studies demonstrate improved engagement for scheduling and navigation (Clark et al., 2024), while also identifying risks such as hallucinations, privacy vulnerabilities, and integration complexity (Wah, 2025; Huo et al., 2025). Industry surveys further indicate higher clinician confidence in administrative AI applications than in clinical decision support (Coherent Solutions, 2025).

Key findings relative to leadership priorities:

- Strongly supports leaders’ focus on AI for routing, agent assist, and self-service.

- Confirms concerns related to data quality, privacy, governance, and integration.

- Highlights safety limitations, validating leadership emphasis on responsible AI oversight.

Automation, RPA, and Front-End Revenue Cycle

Evidence from revenue-cycle operations shows that robotic process automation (RPA) improves registration, eligibility checks, and verification processes. Industry case reports demonstrate reductions in errors, improved cycle times, and lower manual workload in rule-based workflows (R1, 2021).

Key findings relative to leadership priorities:

- Validates expectations that automation reduces variability and improves speed and accuracy.

- Reinforces the role of standardized workflows as prerequisites for automation success.

Data, Governance, Safety, and Systems-Level Themes

AI governance literature consistently identifies data quality, transparency, validation, and oversight structures as critical prerequisites (Al Kuwaiti, 2023). Research on conversational agents documents limited safety reporting and weak evaluation frameworks (Laranjo et al., 2018; Huo et al., 2025). Equity concerns remain significant, especially for digital access pathways (Ezeamii et al., 2024).

Industry guidance recommends formal governance models, steering committees, standardized intake processes, and enforceable controls (HIMSS, 2022).

Key findings relative to leadership priorities:

- Reinforces leaders’ emphasis on governance, identity management, data stewardship, and PHI-safe workflows.

- Validates concerns about data friction, measurement inconsistencies, and pipeline fragility.

Synthesis Relative to Leadership Perspectives

Across domains, the literature supports leadership perspectives that:

- Cloud and AI improve access and efficiency when integrated into structured workflows (HIMSS, 2022).

- Conversational agents are effective for administrative tasks but require careful governance (Laranjo et al., 2018).

- RPA improves accuracy and reduces manual work (R1, 2021; Patmon, 2023).

- Data governance is foundational for safe, scalable deployment (Al Kuwaiti, 2023).

However, the literature also highlights three critical research gaps that align with and extend leadership concerns:

Safety and Validation Gaps

Lack of rigorous evaluation for conversational agents, LLM tools, and automated decision support, with inconsistent metrics and limited clinical validation.

Measurement and Standards Gaps

Absence of consistent frameworks for assessing digital access outcomes, AI performance, or equity impacts across channels.

Workforce and Change-Management Gaps

Sparse evidence on how cloud modernization reshapes staffing, training needs, and human–AI task allocation, despite being repeatedly flagged by leaders as critical.

Overall Interpretation

Taken together, the evidence base reinforces the leadership insight that cloud contact center modernization is a systems-engineering and governance challenge rather than solely a technology acquisition. Studies affirm the importance of integrated workflows, high-quality data, strong governance, and structured implementation models, while also revealing gaps in safety evaluation, measurement rigor, and workforce transformation. These gaps remain unresolved in the current evidence base. Rather than reiterating technical features, the following sections focus on how these capabilities operate as organizational enablers across leadership domains.

4. Framework

4.1 Executive-Level Use Case Framework

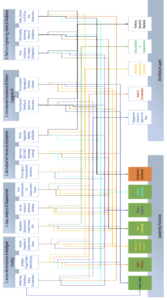

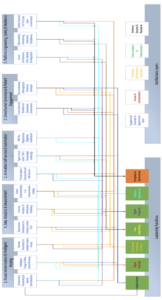

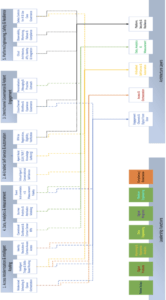

To demonstrate how leadership domains interact with the enterprise cloud contact center, this section introduces a simplified, executive-level use-case framework. While it is not a formal Unified Modeling Language (UML) diagram, which shows the workflow of a system (Kossiakoff et al. 2020, 234), the framework functions as a systems-level visualization that clarifies cross-functional dependencies, value flow across organizational subsystems, and alignment between core platform capabilities and enterprise outcomes. Its purpose is to provide a clear conceptual anchor for the use-case matrix in Section 4.3 and to frame modernization through an accessible systems-engineering lens.

The Mermaid use-case diagram was developed by translating six thematic domains into five architectural layers and aligning leadership-derived activities with formal system functions. Each use case was mapped backward to leadership themes and forward to architectural dependencies, ensuring traceability consistent with systems-engineering principles such as the V-Model (Kossiakoff et al. 2020, 30–38).

The framework functions as a high-level architectural map: each domain provides an input that becomes an output for downstream functions. In doing so, the diagram clarifies how the cloud contact center operates as a coordinated enterprise subsystem rather than a collection of independent projects.

4.2 Purpose of the Framework

Cloud contact center modernization represents an enterprise-wide transformation spanning technology, operations, analytics, and governance. Executives often encounter these initiatives piecemeal (telephony upgrades in one area, AI pilots in another, workflow redesign in others), which leads to fragmented interpretations of modernization. The framework in this section addresses that fragmentation by presenting an integrated view in which architecture, functions, and outcomes are organized as coordinated layers of a single digital access platform.

The model introduces five architectural layers, which together map to the leadership domains assessed in this study:

- Engagement Channels & Digital Front Door,

- Access & Orchestration,

- AI-Enabled Self-Service & Automation,

- Data, Analytics & Measurement, and

- Platform, Security & Resilience.

Each layer reflects the operational responsibilities of Patient Access, Digital Products, Experience Analytics, Data Engineering, Digital Programs, and Platform Engineering, illustrating where workflows intersect and where unified governance is required. This alignment clarifies which roles shape patient experience, orchestrate workflows, maintain data fidelity, and safeguard system resilience.

By consolidating twelve detailed use cases into five executive categories, the framework shows that use cases are not isolated features but multidimensional capabilities requiring coordination across architecture, operations, analytics, and governance. This approach reduces cognitive complexity while reinforcing modernization as a systems-engineering initiative rather than a technology procurement decision (Kossiakoff et al. 2020, 5–15, 95–115).

The model also serves as a reusable tool for prioritization, governance, and roadmap development, enabling executives to guide modernization with shared clarity and accountability.

4.3 Core Use Case Categories

To translate the complexity of cloud contact center modernization into an accessible executive construct, the twelve detailed use cases identified in this study were consolidated into five strategic clusters. These clusters mirror how leaders naturally conceptualize outcomes, capabilities, and cross-functional dependencies. Each cluster represents a system-level function that spans multiple domains and architectural layers.

- Access Modernization & Intelligent Routing

This cluster focuses on improving how patients reach the organization and how effectively the system directs them to the appropriate service.

Included use cases:

- Modern scheduling and queue orchestration

- Intelligent triage and skills-based routing

- Identity resolution and context-aware access

- AI-Enabled Self-Service & Automation

These use cases leverage automation to reduce friction, accelerate resolution, and streamline operational flow.

Included use cases:

- Virtual agents and conversational self-service

- Agent assist and intent detection

- RPA for registration, eligibility, and front-end verification

- Omnichannel Governance & Patient Engagement

This cluster ensures consistency, safety, and personalization across all digital and human channels.

Included use cases:

- Cross-channel governance and workflow standardization

- Personalization, messaging orchestration, and proactive outreach

- Data, Analytics, and Measurement

This cluster strengthens visibility, measurement fidelity, and data-driven decision making.

Included use cases:

- Real-time operational dashboards and KPI frameworks

- Journey analytics, attribution, and event instrumentation

- Platform Engineering, Safety, and Resilience

This cluster underpins all others by ensuring the platform is secure, scalable, compliant, and observable.

Included use cases:

- Cloud architecture, APIs, identity, and integration

- Safety controls, monitoring, and multi-region resiliency

The use-case diagram is provided as diagram code in Appendix IV and as a Microsoft Visio rendering in Appendix V; together, these visually represent the interactions described above. The diagram reinforces the system-level concept that every use case requires coordination across multiple layers of architecture and governance, consistent with the systems-engineering orientation observed in leadership perspectives.

Table 2. The Use Case Matrix

| Executive Use Case Cluster | Detailed Use Case | Primary Functions Involved | Architecture Layers Touched |
|---|---|---|---|
| Access Modernization & Intelligent Routing | Modernized Scheduling + Queue Orchestration | Patient Access; Digital Products; Digital Programs; Experience Analytics; Platform Engineering | Engagement Channels → Access & Orchestration → AI Orchestration |
| Access Modernization & Intelligent Routing | Intelligent Triage + Skills-Based Routing | Patient Access; Digital Products; Experience Analytics; Platform Engineering | Engagement Channels → Access & Orchestration → AI Orchestration |
| Access Modernization & Intelligent Routing | Identity Resolution + Context-Aware Access | Digital Products; Digital Programs; Experience Analytics | Engagement Channels → Access & Orchestration |
| AI-Enabled Self-Service & Automation | Virtual Agent / Conversational Self-Service | Digital Products; Patient Access; Digital Programs; Platform Engineering | Access & Orchestration → AI/Automation → Platform Resilience |
| AI-Enabled Self-Service & Automation | Agent Assist (LLM, NLP, Knowledge Surfacing) | Digital Products; Patient Access; Data Engineering; Platform Engineering | Access & Orchestration → AI/Automation |
| AI-Enabled Self-Service & Automation | RPA for Registration, Eligibility, Verification | Data Engineering; Digital Programs; Platform Engineering | AI/Automation → Platform Resilience |
| Omnichannel Governance & Patient Engagement | Cross-Channel Workflow + Experience Governance | Digital Products; Patient Access; Digital Programs; Compliance/Governance | Engagement Channels → Governance Layer → Access Orchestration |
| Omnichannel Governance & Patient Engagement | Personalization, Messaging, Proactive Outreach | Digital Products; Experience Analytics; Patient Access; Digital Programs | Engagement Channels → Access Orchestration → Data & Analytics |
| Data, Analytics, & Measurement | Operational Dashboards, Contact Center KPIs | Marketing & Experience Analytics; Data Engineering; Patient Access | Data & Analytics → Measurement → Reporting/Insights |
| Data, Analytics, & Measurement | Journey Analytics + Attribution Modeling | Marketing & Experience Analytics; Data Engineering; Digital Products | Data & Analytics → Measurement |
| Data, Analytics, & Measurement | Event Instrumentation + Measurement Fidelity | Data Engineering; Digital Products; Marketing & Experience Analytics | Data & Analytics → Engagement Channels |
| Platform Engineering, Safety, & Resilience | Cloud Architecture, APIs, Identity, Integration | Platform Engineering; Data Engineering; Digital Programs | Platform Foundation → Security → Resilience |
| Platform Engineering, Safety, & Resilience | Observability, Monitoring, Failover, Compliance | Platform Engineering; Data Engineering; Compliance/Security | Platform Foundation → Resilience |
| Platform Engineering, Safety, & Resilience | Safety Controls for AI + Use Case Governance | Platform Engineering; Digital Products; Compliance/Governance | AI/Automation → Platform Security |
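To make the cross-functional dependencies in Table 2 concrete, the matrix can be treated as data. The minimal Python sketch below transcribes the "Primary Functions Involved" column and counts how many detailed use cases each function participates in. It assumes that "Marketing & Experience Analytics" and "Experience Analytics" denote the same function; that aliasing is an editorial assumption, not a distinction made in the table.

```python
from collections import Counter
from typing import Set

# "Primary Functions Involved" column, transcribed from Table 2
USE_CASE_FUNCTIONS = {
    "Modernized Scheduling + Queue Orchestration": "Patient Access; Digital Products; Digital Programs; Experience Analytics; Platform Engineering",
    "Intelligent Triage + Skills-Based Routing": "Patient Access; Digital Products; Experience Analytics; Platform Engineering",
    "Identity Resolution + Context-Aware Access": "Digital Products; Digital Programs; Experience Analytics",
    "Virtual Agent / Conversational Self-Service": "Digital Products; Patient Access; Digital Programs; Platform Engineering",
    "Agent Assist (LLM, NLP, Knowledge Surfacing)": "Digital Products; Patient Access; Data Engineering; Platform Engineering",
    "RPA for Registration, Eligibility, Verification": "Data Engineering; Digital Programs; Platform Engineering",
    "Cross-Channel Workflow + Experience Governance": "Digital Products; Patient Access; Digital Programs; Compliance/Governance",
    "Personalization, Messaging, Proactive Outreach": "Digital Products; Experience Analytics; Patient Access; Digital Programs",
    "Operational Dashboards, Contact Center KPIs": "Marketing & Experience Analytics; Data Engineering; Patient Access",
    "Journey Analytics + Attribution Modeling": "Marketing & Experience Analytics; Data Engineering; Digital Products",
    "Event Instrumentation + Measurement Fidelity": "Data Engineering; Digital Products; Marketing & Experience Analytics",
    "Cloud Architecture, APIs, Identity, Integration": "Platform Engineering; Data Engineering; Digital Programs",
    "Observability, Monitoring, Failover, Compliance": "Platform Engineering; Data Engineering; Compliance/Security",
    "Safety Controls for AI + Use Case Governance": "Platform Engineering; Digital Products; Compliance/Governance",
}

# Editorial assumption: both labels refer to the same analytics function
ALIASES = {"Marketing & Experience Analytics": "Experience Analytics"}


def functions_for(use_case: str) -> Set[str]:
    """Return the normalized set of functions involved in one use case."""
    names = (name.strip() for name in USE_CASE_FUNCTIONS[use_case].split(";"))
    return {ALIASES.get(name, name) for name in names}


# How many detailed use cases each function participates in
participation = Counter(f for uc in USE_CASE_FUNCTIONS for f in functions_for(uc))
# Use cases owned by a single function (empty for this matrix)
single_function = [uc for uc in USE_CASE_FUNCTIONS if len(functions_for(uc)) < 2]

for function, count in participation.most_common():
    print(f"{function}: {count} of {len(USE_CASE_FUNCTIONS)} use cases")
```

Under that assumption, Digital Products appears in ten of the fourteen rows and every row involves at least three functions, consistent with the framework's premise that use cases are multidimensional capabilities rather than isolated features.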

4.4 How Leaders Prioritize These Use Cases

Leader prioritization of cloud contact center use cases reflects each domain’s operational pressures, strategic mandates, and accountability for measurable outcomes. Although the five executive clusters form a unified systems-level framework, leaders emphasize different capabilities based on their subsystem vantage points. These differences create both productive tension and complementary strengths, which the framework reconciles by making underlying dependencies visible.

Patient Access leaders primarily prioritize Access Modernization & Intelligent Routing and AI-Enabled Self-Service. Their operational context is shaped by frontline pressures to reduce wait times, abandonment, and manual workload. Prioritization is outcome-driven, emphasizing service levels, staffing efficiency, and friction reduction at the digital front door. However, this focus may underweight long-term platform constraints or data governance prerequisites affecting scalability.

Digital Products leadership emphasizes Omnichannel Governance & Patient Engagement and AI orchestration. Their priorities reflect consumer expectations, digital strategy alignment, and ROI goals, with a focus on rapid iteration, personalization, and continuous optimization. These ambitions can strain integration readiness and data maturity, creating tension with Data Engineering and Platform Engineering teams responsible for foundational capabilities.

Marketing and Experience Analytics prioritize Data, Analytics & Measurement, emphasizing instrumentation fidelity, funnel visibility, attribution accuracy, and quantifiable experience improvements. Their effectiveness depends on upstream channel integration and consistent enterprise data definitions; limitations in interoperability or data reliability constrain full operationalization of measurement frameworks.

Data Engineering prioritizes use cases related to data readiness, AI enablement, and architectural extensibility. This foundational orientation emphasizes pipelines, identity models, event streams, and governance structures. While essential for safety and reliability, this approach may appear slower to leaders seeking rapid deployment of high-impact capabilities.

Digital Programs (CRM, telephony, messaging) focus on integration complexity, workflow standardization, and alignment with vendor ecosystems. Their prioritization reflects dependencies on third-party roadmaps, release cycles, and multi-team coordination, positioning this subsystem at the intersection of technical constraints and operational demand.

Platform Engineering prioritizes Platform, Safety & Resilience, including HIPAA controls, identity and access management, network architecture, observability, and failover design. By gating use cases based on risk posture, Platform Engineering introduces necessary constraints that may be perceived as barriers by experience-focused leaders but are critical to maintaining system integrity and preventing unsafe or unsustainable implementations.

Collectively, these perspectives reveal that prioritization is inherently multi-dimensional.

- Operational leaders emphasize speed and measurable improvement.

- Digital and analytics leaders emphasize orchestration, personalization, and insight generation.

- Engineering leaders emphasize readiness, reliability, and architectural integrity.

The use-case framework introduced in Section 4 clarifies where dependencies exist, where risks concentrate, and where sequencing matters. As a result, prioritization becomes a structured negotiation between ambition and feasibility, anchored in enterprise readiness, cross-functional governance, and shared accountability for outcomes (Kossiakoff et al. 2020, 17–29, 30–38).

5. Discussion

5.1 Integrating Leadership Perspectives and Evidence

Across leadership domains, a central insight emerges: the organizational conditions required for a cloud contact center to function as an enterprise access platform differ from the issues leaders emphasize in daily operational and strategic work. Leaders appropriately focus on outcome-oriented goals (reduced wait times, improved digital engagement, automation gains, and measurable experience improvements), which align with evidence supporting digital front doors, AI augmentation, and virtual access models.

However, integrating these perspectives with the literature reveals a gap between desired outcomes and foundational readiness. While leaders articulate clear objectives, system success depends on underlying enablers: data quality, unified identity management, governance maturity, architectural resilience, and cross-channel instrumentation. Cloud and AI studies consistently show that modernization efforts fail not because of unclear goals but because prerequisites for safe, scalable, and coordinated deployment remain underdeveloped (Hu & Bai, 2014; Sachdeva et al., 2024; Stoumpos et al., 2023).

This tension, in which aspirational outcomes outpace foundational readiness, defines the current state of cloud contact center modernization. Leaders are directionally aligned with the evidence, but sequencing introduces systemic risk. Sustainable value emerges only when outcome goals and foundational capabilities advance together: architectural integration, analytics readiness, governance alignment, and change-management capacity must progress in parallel for modernization to succeed.

5.2 The Cloud Contact Center as a Systems Engineering Problem

Findings from the leadership assessment and systematic review converge on the conclusion that cloud contact centers should be understood as complex socio-technical systems rather than discrete technology replacements. This framing aligns with systems-engineering literature emphasizing lifecycle alignment, requirements traceability, and the orchestration of components into a coordinated whole (Romero et al., 2016).

Cross-domain coordination is therefore intrinsic and not optional:

- Patient Access defines workflows and operational KPIs.

- Digital Products shape digital front-door experiences.

- Analytics ensures measurement fidelity and attribution clarity.

- Data Engineering maintains pipelines, models, and identity structures.

- Digital Programs integrate CRM, telephony, and messaging ecosystems.

- Platform Engineering safeguards architecture, interoperability, security, and resiliency.

No domain can independently drive modernization, because each use case spans multiple architectural layers, from engagement channels and orchestration logic to analytics, automation, and platform integrity.

The literature supports this systems-based interpretation. Research on cloud adoption identifies governance, data interoperability, workflow alignment, and architectural maturity, not product choice, as the primary determinants of success (Meri et al., 2023). Evidence on AI agents shows that safety, validation, and integration, rather than model sophistication, are the limiting factors (Laranjo et al., 2018; Huo et al., 2025).

Explicit Systems Engineering Takeaway

The cloud contact center is a systems engineering problem because it:

- Requires coordinated design, implementation, and validation across domains, mirroring the V-Model lifecycle even when executed through agile methods (Kossiakoff et al. 2020, 30–38).

- Depends more on architecture, governance, and data readiness than on any individual technology, meaning that organizational alignment, not tool capability, determines performance (Kossiakoff et al. 2020, 95–115).

Thus, while technologies operate as enablers, enterprise value emerges only when the organizational system functions coherently.

5.3 Emerging Trends and Future Directions

In recent years, healthcare leaders have accelerated expectations for AI-enabled contact center systems, particularly capabilities such as real-time knowledge surfacing, automated call summarization, and intelligent next-best-action support. Like early-stage device integrations, these tools offer clear operational gains but require careful implementation. The literature consistently cautions that unvalidated conversational models can introduce safety risks that propagate through downstream clinical and operational workflows (Huo et al., 2025). As organizations move toward deploying AI agents in frontline settings, these systems increasingly resemble integrated subsystems: each machine-generated output becomes an input into clinical workflows and must therefore be verified for accuracy, interpretability, and consistency.

As conversational agents assume functions adjacent to triage, governance requirements expand. What was once general oversight begins to resemble medication safety or clinical decision-support governance, with layered review checkpoints and escalation pathways. Each component (AI models, orchestration logic, and clinical rules) must pass defined performance thresholds before influencing patient-facing decisions.
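The threshold-gated review described above can be sketched as a simple release check. The Python below is a minimal illustration, assuming hypothetical component names, metrics, and threshold values; none are drawn from the study or from any specific governance standard. It treats an unmeasured metric as a failure and permits patient-facing release only when every component passes.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Threshold:
    """One release criterion for a component (illustrative)."""
    metric: str
    minimum: Optional[float] = None  # observed value must be >= minimum
    maximum: Optional[float] = None  # observed value must be <= maximum


def gate_failures(component: str, observed: Dict[str, float],
                  thresholds: List[Threshold]) -> List[str]:
    """Return a human-readable reason for every threshold the component misses."""
    failures = []
    for t in thresholds:
        value = observed.get(t.metric)
        if value is None:
            # An unmeasured metric counts as a failure, not a pass
            failures.append(f"{component}: {t.metric} not measured")
        elif t.minimum is not None and value < t.minimum:
            failures.append(f"{component}: {t.metric}={value} below {t.minimum}")
        elif t.maximum is not None and value > t.maximum:
            failures.append(f"{component}: {t.metric}={value} above {t.maximum}")
    return failures


# Hypothetical components, metrics, and thresholds for illustration only
GATES = {
    "intent_model": [Threshold("intent_accuracy", minimum=0.90),
                     Threshold("missed_escalation_rate", maximum=0.01)],
    "orchestration_logic": [Threshold("routing_error_rate", maximum=0.02)],
    "clinical_rules": [Threshold("rule_test_pass_rate", minimum=1.0)],
}

observed = {
    "intent_model": {"intent_accuracy": 0.93, "missed_escalation_rate": 0.02},
    "orchestration_logic": {"routing_error_rate": 0.01},
    "clinical_rules": {"rule_test_pass_rate": 1.0},
}

report = {name: gate_failures(name, observed.get(name, {}), checks)
          for name, checks in GATES.items()}
# Patient-facing release requires every component to pass every threshold
release_allowed = all(not reasons for reasons in report.values())
print(release_allowed, report)
```

In this example the intent model meets its accuracy target but misses the escalation criterion, so the whole release is blocked; a layered review would then route that finding to the appropriate checkpoint.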

At the same time, the underlying infrastructure is evolving. Leaders increasingly prioritize cloud-native resiliency through multi-region failover, event-driven architectures, and elastic scaling. Seasonal surges and public health events have elevated resiliency from a technical consideration to a clinical access requirement. As with maintaining signal integrity across device, application, and network layers, every component must remain responsive under stress, and single points of failure must be mitigated.

Advances in identity resolution and instrumentation now enable real-time personalization and context-aware access. While these capabilities parallel those in consumer industries, healthcare introduces additional constraints related to consent, privacy, equity, and algorithmic bias that must be embedded within routing logic. Similar to hardware–software integration, AI-driven personalization requires shared specifications across engineering, compliance, and clinical leadership.
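One way to picture consent embedded in routing logic is a skills-based router that forwards patient context only when consent is recorded. The Python sketch below is a simplified, hypothetical illustration: the agent and contact fields, the longest-idle selection rule, and the single consent flag are assumptions, and a production router would also need to weigh queue priority, equity safeguards, and finer-grained consent scopes.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional, Tuple


@dataclass
class Agent:
    name: str
    skills: FrozenSet[str]
    available: bool
    idle_seconds: float


@dataclass
class InboundContact:
    required_skills: FrozenSet[str]
    patient_context: Dict[str, str] = field(default_factory=dict)
    consent_to_personalize: bool = False


def route(contact: InboundContact,
          agents: List[Agent]) -> Tuple[Optional[Agent], Dict[str, str]]:
    """Choose the longest-idle available agent holding every required skill.

    Patient context is forwarded only when consent is recorded; otherwise the
    agent receives an empty context. Returns (None, context) if no agent fits.
    """
    eligible = [a for a in agents
                if a.available and contact.required_skills <= a.skills]
    chosen = max(eligible, key=lambda a: a.idle_seconds, default=None)
    context = dict(contact.patient_context) if contact.consent_to_personalize else {}
    return chosen, context


agents = [
    Agent("A", frozenset({"scheduling", "spanish"}), available=True, idle_seconds=30),
    Agent("B", frozenset({"scheduling"}), available=True, idle_seconds=120),
    Agent("C", frozenset({"scheduling", "spanish"}), available=False, idle_seconds=500),
]
contact = InboundContact(frozenset({"scheduling", "spanish"}),
                         patient_context={"preferred_site": "north clinic"})
agent, ctx = route(contact, agents)
print(agent.name if agent else None, ctx)
```

Here agent C has the longest idle time but is unavailable, so the contact goes to agent A, and because no consent is recorded the agent sees no patient context.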

The emergence of AI copilots across engineering, analytics, and operations represents another shift, with the potential to augment rather than replace staff. However, long-term value depends on reproducibility, strong guardrails, and validated integration, comparable to ensuring that informatics outputs are both efficient and clinically interpretable before advancing system maturity.

Across the literature, five persistent research gaps remain. Together, they represent the untested components of a broader ecosystem still in development:

- Safety and validation frameworks for AI and conversational agents

- Digital equity implications of omnichannel access

- Measurement standards for cloud contact center outcomes

- Comparative effectiveness of AI-enabled routing and automation

- Organizational science on workforce adaptation and trust

Progress in these areas will strengthen the evidence base for executive decision-making. Much like moving a prototype through electrical engineering, software engineering, clinical testing, and network validation, the field must iterate through cycles of evaluation, refinement, and re-testing. As gaps close and systems mature, organizations will gain the confidence needed to deploy AI-assisted contact center solutions that are not only efficient but also safe, equitable, and resilient.

6. Conclusion

Cloud contact centers represent a strategic shift in how healthcare delivers access, transforming the “front door” from siloed telephony into integrated, data-driven, omnichannel platforms. As demonstrated in this study, the cloud contact center now functions as an enterprise access hub that directly shapes patient experience, operational performance, and care equity. Its role extends beyond communication management to orchestrating workflows, data flows, and governance across organizational subsystems.

Based on leadership perspectives and the supporting evidence, five actionable insights emerge for healthcare executives considering cloud contact center modernization:

- Treat access as enterprise strategy, not IT infrastructure. Cloud contact centers shape patient experience, equity, and system capacity and must be governed accordingly.

- Sequence outcomes with readiness. KPIs, automation, and AI value materialize only when identity, data pipelines, and governance mature in parallel.

- Anchor modernization in systems engineering principles. Traceability between requirements, workflows, architecture, and validation reduces implementation risk.

- Prioritize governance as an enabler, not a constraint. Responsible AI oversight, data stewardship, and security controls accelerate sustainable adoption.

- Design for human–AI collaboration. Workforce readiness and change management are as critical as model performance or platform capability.

Together, these principles offer a practical decision framework for leaders seeking to modernize patient access while maintaining safety, equity, and operational resilience.

Findings from the executive-level systematic review reinforce this conclusion. Evidence supports the value of digital front doors, AI-enabled self-service, and cloud-based access centers, but benefits materialize consistently only when governance structures, data pipelines, and architectural patterns are aligned and actively maintained. Technology selection alone does not predict success; organizational readiness, cross-domain coordination, and disciplined lifecycle execution determine system performance.

Overall, this study contributes a leadership-informed, evidence-grounded framework that positions cloud contact center modernization as a systems-engineering challenge requiring coordinated integration across operations, digital experience, analytics, data engineering, and platform governance. Modernization succeeds when subsystems operate in alignment, requirements and workflows remain traceable across domains, and governance enables safe, scalable execution.

Looking ahead, AI-augmented access centers will evolve toward real-time personalization, hybrid human–AI collaboration, and healthcare-specific safety governance, expanding the strategic role of contact centers within the digital ecosystem. As these capabilities mature, health systems will increasingly rely on resilient architectures, unified identity models, and cross-channel measurement frameworks to support scalable and equitable access.

Future research should prioritize validated safety frameworks for conversational agents, equity implications of omnichannel access, standardized outcome measurement for cloud contact centers, and workforce adaptation to human–AI collaboration. Advancing these areas will strengthen the evidence base executives depend on as they navigate the next phase of access modernization.

Appendices

Appendix I – Interview Questions to Leadership Template

- Please state your title and the company you work for:

- Title:

- In summary, please tell me about your obligations and responsibilities:

- In summary, please tell me how your obligations and responsibilities tie to Artificial Intelligence, Machine Learning, Software, and Technology?

- In summary, what are your expectations (KPIs, budget, performance, etc.) that Artificial Intelligence, Machine Learning, Software, and/or Technology should deliver to you or your stakeholders?

- If you have examples or graphs, please share below:

- In summary, were your expectations met after implementation?

- Have you ever heard of Systems Engineering?

- If yes, please share what kind of experience you have had with systems engineering. If not, please tell me what you think systems engineering consists of.

- Viewing this V-Model for the first time, would you consider it an advanced, intermediate, or basic flow chart to follow?

Appendix II – Pre-AI and Post-AI Metrics Table

| Metric | Pre-AI (Baseline) | Post-AI (6 months) |
|---|---|---|
| Monthly inbound calls | 100,000 | 88,000 |
| % calls for simple tasks (FAQ, hours) | 35% | 20% |
| Average handle time (minutes) | 6.0 | 4.8 |
| Call abandonment rate | 12% | 8% |
| Online scheduling starts (web) | 10,000 | 14,000 |
| Completed online appointments | 7,000 | 10,500 |
| CSAT for digital interactions | 4.1 / 5 | 4.4 / 5 |

Note: Changes may reflect combined effects of workflow redesign, staffing, seasonality, channel shift, and other non-AI interventions.
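The Appendix II figures imply a few quantities the table does not state directly, such as the online scheduling conversion rate. The short Python sketch below recomputes relative changes from the table. The derived quantities (conversion as completed appointments divided by scheduling starts, and simple-task call volume as share times inbound calls) are editorial calculations, not figures reported by the study.

```python
# Figures transcribed from the Appendix II table (baseline vs. six months post-AI)
baseline = {"inbound_calls": 100_000, "simple_task_share": 0.35, "aht_min": 6.0,
            "abandonment": 0.12, "online_starts": 10_000, "online_completed": 7_000}
post_ai = {"inbound_calls": 88_000, "simple_task_share": 0.20, "aht_min": 4.8,
           "abandonment": 0.08, "online_starts": 14_000, "online_completed": 10_500}


def pct_change(before: float, after: float) -> float:
    """Relative change in percent."""
    return (after - before) / before * 100


def derived(m: dict) -> dict:
    """Quantities implied by, but not listed in, the table."""
    return {
        # Online scheduling conversion: completed appointments / scheduling starts
        "online_conversion": m["online_completed"] / m["online_starts"],
        # Estimated monthly call volume for simple tasks (FAQ, hours)
        "simple_task_calls": m["inbound_calls"] * m["simple_task_share"],
    }


before, after = derived(baseline), derived(post_ai)
summary = {
    "inbound_calls_pct": pct_change(baseline["inbound_calls"], post_ai["inbound_calls"]),
    "aht_pct": pct_change(baseline["aht_min"], post_ai["aht_min"]),
    # Abandonment is already a rate, so report the percentage-point difference
    "abandonment_pp": (post_ai["abandonment"] - baseline["abandonment"]) * 100,
    "conversion_before": before["online_conversion"],
    "conversion_after": after["online_conversion"],
    "simple_task_calls_pct": pct_change(before["simple_task_calls"],
                                        after["simple_task_calls"]),
}
for key, value in summary.items():
    print(f"{key}: {value:.2f}")
```

By this arithmetic, inbound calls fall 12%, average handle time falls 20%, abandonment drops 4 percentage points, and online conversion rises from 70% to 75%. Read alongside the table's note, these figures describe before/after differences only; they do not isolate the effect of AI from workflow redesign, staffing, seasonality, or channel shift.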

Appendix III – AI-Enabled DevOps Platform Engineering Questions & Model Answer Sample

This appendix provides example leadership-level questions and model answers for a Healthcare DevOps Platform Engineer supporting a Cloud Contact Center implementation. It is intended as illustrative reference material and may be tailored to organizational context.

- How do you ensure a Cloud Contact Center implementation aligns with healthcare regulatory requirements such as HIPAA?

Answer:

I start by establishing a compliance-by-design architecture. This includes enforcing encryption in transit and at rest, implementing strict IAM policies, using audit logging with immutable trails, and ensuring business associate agreements (BAAs) are in place with all cloud vendors. I work closely with Security, Compliance, and Legal to validate data flows, confirm that PHI boundaries are respected, and conduct periodic compliance reviews. Additionally, I integrate automated security scanning and policy enforcement in CI/CD pipelines to maintain continuous compliance.
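The automated policy enforcement mentioned in this answer can be illustrated as a pre-deployment check that fails the pipeline when required controls are missing. The Python sketch below is hypothetical: the configuration keys, the TLS 1.2 minimum, and recording BAA status alongside deployment settings are assumptions for illustration, not requirements stated by HIPAA or features of any particular CI/CD tool.

```python
from typing import Dict, List, Tuple

MIN_TLS: Tuple[int, ...] = (1, 2)  # assumed organizational minimum; adjust to local policy


def parse_version(v: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as '1.3' into a comparable tuple."""
    return tuple(int(part) for part in v.split("."))


def compliance_findings(service: str, cfg: Dict[str, object]) -> List[str]:
    """Check one service's settings against baseline controls named in the answer above.

    The keys are hypothetical; a real pipeline would read them from
    infrastructure-as-code files or cloud provider APIs.
    """
    findings = []
    if not cfg.get("encryption_at_rest", False):
        findings.append(f"{service}: encryption at rest disabled")
    if parse_version(str(cfg.get("tls_min_version", "0"))) < MIN_TLS:
        findings.append(f"{service}: TLS below {'.'.join(map(str, MIN_TLS))}")
    if not cfg.get("audit_log_immutable", False):
        findings.append(f"{service}: audit log is not immutable")
    if not cfg.get("baa_signed", False):
        findings.append(f"{service}: no BAA recorded for hosting vendor")
    return findings


services = {
    "ivr-transcripts": {"encryption_at_rest": True, "tls_min_version": "1.3",
                        "audit_log_immutable": True, "baa_signed": True},
    "call-recordings": {"encryption_at_rest": True, "tls_min_version": "1.0",
                        "audit_log_immutable": False, "baa_signed": True},
}

all_findings = [f for name, cfg in services.items() for f in compliance_findings(name, cfg)]
for finding in all_findings:
    print(finding)
# In CI, a non-empty finding list would fail the build (e.g., via a non-zero exit code)
```

Treating a missing key as a failure keeps the check conservative: a service cannot pass simply because a control was never declared.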

- What leadership principles guide your approach to modernizing healthcare call center systems?

Answer:

My leadership philosophy is grounded in customer-centricity, transparency, and operational rigor. I encourage teams to focus on improving patient experience, not just infrastructure performance. I promote open communication, cross-functional collaboration, and data-driven decision-making. I also ensure teams have measurable objectives around reliability, security, and scalability to maintain clarity and accountability throughout the modernization journey.

- How do you balance innovation with risk mitigation in a healthcare DevOps environment?

Answer:

I use a progressive innovation approach (pilots, blue/green deployments, and canary releases) to test changes with minimal impact on patients and agents. I advocate strong observability practices and real-time telemetry to monitor risks proactively. Governance gates help ensure that we innovate responsibly, and retrospective reviews help refine our risk posture. This lets us adopt new capabilities without compromising safety or regulatory obligations.
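A canary release reduces risk only when there is an explicit rule for promoting or rolling back the change. The sketch below shows one simple form of that rule: it compares the canary's error rate with the stable baseline's error rate. The thresholds, minimum sample size, and function names are hypothetical. Real canary analysis usually also compares latency and applies statistical tests.

```python
"""Minimal canary promotion gate (illustrative thresholds, not a standard)."""
from dataclasses import dataclass


@dataclass
class Cohort:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: Cohort, canary: Cohort,
                    min_requests: int = 500,
                    max_abs_increase: float = 0.005,
                    max_ratio: float = 1.5) -> str:
    """Return 'wait', 'promote', or 'rollback' for the canary cohort.

    - 'wait' until the canary has served enough traffic to judge.
    - 'rollback' if its error rate exceeds the baseline's by more than
      max_abs_increase (absolute) AND by more than max_ratio (relative).
    - otherwise 'promote'.
    """
    if canary.requests < min_requests:
        return "wait"
    delta = canary.error_rate - baseline.error_rate
    # A zero-error baseline makes any canary error infinitely worse in ratio terms.
    ratio = canary.error_rate / baseline.error_rate if baseline.error_rate else float("inf")
    if delta > max_abs_increase and ratio > max_ratio:
        return "rollback"
    return "promote"


if __name__ == "__main__":
    stable = Cohort(requests=20_000, errors=40)   # 0.2% errors
    canary = Cohort(requests=1_000, errors=12)    # 1.2% errors
    print(canary_decision(stable, canary))        # rollback
```

The rule requires both an absolute and a relative increase. This keeps tiny absolute fluctuations on low-error services from triggering rollbacks.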

- What is your strategy for leading cross-functional teams during a Cloud Contact Center migration?

Answer:

I emphasize building a unified mission around improving the patient experience. My strategy is to create structured workstreams (Architecture, Security, Data, Integrations, Agent Experience, and Testing), each with clearly defined owners and success metrics. I facilitate weekly alignment meetings, enforce transparent status reporting, and make sure blockers are escalated quickly. Leadership engagement is key, so I provide regular executive updates to keep everyone aligned and accountable.

- How do you measure the success of a Cloud Contact Center implementation?

Answer:

Success is measured using both technical KPIs and patient/agent experience metrics, such as:

- System availability (99.9%+ uptime)

- Contact routing accuracy

- Reduction in average handle time (AHT)

- Improvement in first-call resolution (FCR)

- Reduction in call abandonment rates

- Increased agent satisfaction scores

- Compliance audit performance

I socialize these KPIs with stakeholders and build dashboards to provide continuous visibility post-launch.
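Several of these KPIs reduce to simple ratios over contact records. The sketch below computes AHT, FCR, and the abandonment rate from a list of hypothetical call records. It also converts an availability target into a downtime budget: 99.9% over a 30-day month allows 43.2 minutes of downtime. The record fields are assumptions. So is the FCR definition used here, which counts a call as resolved when the same caller does not call back within a window. Organizations define FCR differently.

```python
"""Illustrative contact-center KPI calculations over hypothetical call records."""
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Call:
    caller_id: str          # hypothetical de-identified caller key
    start: datetime
    answered: bool          # False means the caller abandoned before an agent answered
    handle_seconds: float   # talk + hold + after-call work; 0 if abandoned


def abandonment_rate(calls: List[Call]) -> float:
    return sum(not c.answered for c in calls) / len(calls)


def average_handle_time_min(calls: List[Call]) -> float:
    answered = [c for c in calls if c.answered]
    return sum(c.handle_seconds for c in answered) / len(answered) / 60.0


def first_contact_resolution(calls: List[Call],
                             window: timedelta = timedelta(days=7)) -> float:
    """Share of answered calls not followed by another answered call from the
    same caller within `window` (a simplifying definition)."""
    answered = sorted((c for c in calls if c.answered), key=lambda c: c.start)
    resolved = 0
    for i, call in enumerate(answered):
        repeat = any(later.caller_id == call.caller_id
                     and later.start - call.start <= window
                     for later in answered[i + 1:])
        if not repeat:
            resolved += 1
    return resolved / len(answered)


def downtime_budget_minutes(availability: float, period_days: float = 30.0) -> float:
    """Allowed downtime for an availability target over a period."""
    return (1.0 - availability) * period_days * 24 * 60


if __name__ == "__main__":
    t0 = datetime(2024, 1, 8, 9, 0)
    calls = [
        Call("p1", t0, True, 300),
        Call("p2", t0 + timedelta(minutes=5), False, 0),
        Call("p1", t0 + timedelta(days=2), True, 240),
        Call("p3", t0 + timedelta(days=3), True, 360),
    ]
    print(f"abandonment: {abandonment_rate(calls):.0%}")       # 25%
    print(f"AHT: {average_handle_time_min(calls):.1f} min")    # 5.0 min
    print(f"FCR: {first_contact_resolution(calls):.0%}")       # 67%
    print(f"99.9% monthly downtime budget: {downtime_budget_minutes(0.999):.1f} min")  # 43.2
```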

- How do you ensure scalability and high availability in a cloud-native contact center design?

Answer:

I design using multi-region failover, autoscaling groups, and distributed microservice patterns. For APIs and telephony components, I ensure redundancy at every layer: data, compute, network, and application. Implementing health checks, circuit breakers, and event-driven architectures helps reduce single points of failure. I work with vendors such as AWS, Azure, or Genesys Cloud to validate their high-availability (HA) architecture and align it with our resiliency standards.
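Here is the circuit-breaker idea in concrete form. A breaker wraps calls to a dependency, for example an EHR lookup during call routing. After repeated failures, it stops calling that dependency for a cool-down period, so the contact flow can fall back instead of hanging. The class below is a minimal, single-threaded sketch with hypothetical parameter names and defaults. Production systems would normally rely on a maintained resilience library or on the platform's built-in mechanisms.

```python
"""Minimal circuit breaker (closed -> open -> half-open), single-threaded sketch."""
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock                       # injectable for testing
        self.failures = 0
        self.opened_at: Optional[float] = None   # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout_s:
            return "half-open"   # cool-down elapsed: let a trial call through
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("dependency unavailable; use fallback path")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            # Trip after too many failures, or re-trip if the half-open trial fails.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

In a contact flow, the handler for `CircuitOpenError` might route the caller to a general queue without EHR context rather than dropping the call. The `clock` parameter lets tests simulate the passage of time.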

- How do you approach integrating the Cloud Contact Center with EHR, CRM, and patient engagement platforms?

Answer:

My approach starts with defining standardized integration patterns: API-first design, HL7/FHIR compliance, and secure OAuth-based token flows. I work with clinical and business stakeholders to map data fields, define PHI boundaries, and ensure that context-aware routing enhances the patient experience. I also promote reusable integration pipelines via CI/CD to reduce duplication and accelerate feature delivery.
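As an illustration of the API-first, FHIR-based pattern, the sketch below builds an authenticated FHIR search for a Patient by phone number, a common first step in identity resolution for context-aware routing. It then routes to a verification step unless exactly one patient matches. It uses only the Python standard library and does not send the request. The base URL is hypothetical. The access token is assumed to come from an OAuth 2.0 flow, such as SMART on FHIR backend services, that is not shown. The one-match rule is a simplifying assumption, not a recommended identity-matching policy.

```python
"""Sketch: FHIR Patient search by phone plus conservative single-match resolution."""
import urllib.parse
import urllib.request
from typing import Optional

FHIR_BASE = "https://fhir.example.org/r4"   # hypothetical endpoint


def build_patient_search(phone: str, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a FHIR search request: GET [base]/Patient?phone=..."""
    query = urllib.parse.urlencode({"phone": phone})
    return urllib.request.Request(
        f"{FHIR_BASE}/Patient?{query}",
        headers={
            "Authorization": f"Bearer {access_token}",   # token from an OAuth 2.0 flow (not shown)
            "Accept": "application/fhir+json",
        },
        method="GET",
    )


def resolve_single_patient(bundle: dict) -> Optional[str]:
    """Return the Patient id only if the searchset Bundle holds exactly one Patient.

    Zero or multiple matches return None, signalling that the caller should be
    routed to an agent for identity verification instead of auto-personalization.
    Non-Patient entries (e.g. an OperationOutcome) are ignored.
    """
    patients = [
        entry["resource"]
        for entry in bundle.get("entry", [])
        if entry.get("resource", {}).get("resourceType") == "Patient"
    ]
    return patients[0].get("id") if len(patients) == 1 else None


if __name__ == "__main__":
    req = build_patient_search("555-0100", "example-token")
    print(req.get_method(), req.full_url)
    sample = {"resourceType": "Bundle", "type": "searchset",
              "entry": [{"resource": {"resourceType": "Patient", "id": "pat-123"}}]}
    print(resolve_single_patient(sample))  # pat-123
```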

- How do you manage change and stakeholder expectations during a large-scale contact center transformation?

Answer:

I create a structured change management plan that includes stakeholder mapping, regular communication cycles, pilot phases, and training sessions for agents and supervisors. I build feedback loops to capture concerns and adjust rollouts. Executives receive high-level dashboards, while operational teams receive detailed readiness updates. The goal is to maintain consistent transparency and reduce uncertainty throughout the transformation.

- What role does observability play in maintaining a reliable healthcare contact center?

Answer:

Observability is foundational. I ensure the platform incorporates distributed tracing, log aggregation, telemetry dashboards, and real-time alerts. These capabilities allow us to detect anomalies early, pinpoint latency issues, and ensure quick incident resolution. Leadership benefits from having a single source of truth for system health, and engineering teams benefit from actionable insights that improve performance and reliability.
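One concrete form of "real-time alerts" is a percentile-latency rule evaluated over a sliding window. A percentile works better here than an average, which can hide the slow tail that callers actually experience. The sketch below uses the nearest-rank percentile over the most recent N samples. The window size, 95th percentile, 800 ms threshold, and minimum sample count are hypothetical values. Monitoring platforms typically provide this capability natively.

```python
"""Sliding-window p95 latency alert (illustrative thresholds)."""
import math
from collections import deque
from typing import Deque, Iterable


def nearest_rank_percentile(values: Iterable[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("no samples")
    rank = math.ceil(pct / 100.0 * len(ordered))   # 1-based rank
    return ordered[max(rank, 1) - 1]


class LatencyAlert:
    def __init__(self, window: int = 200, pct: float = 95.0,
                 threshold_ms: float = 800.0, min_samples: int = 20) -> None:
        # deque with maxlen drops the oldest sample automatically.
        self.samples: Deque[float] = deque(maxlen=window)
        self.pct = pct
        self.threshold_ms = threshold_ms
        self.min_samples = min_samples

    def observe(self, latency_ms: float) -> bool:
        """Record one request latency; return True if the alert condition holds."""
        self.samples.append(latency_ms)
        if len(self.samples) < self.min_samples:
            return False   # not enough data to judge the tail yet
        return nearest_rank_percentile(self.samples, self.pct) > self.threshold_ms
```

With a 20-sample window, a single slow request does not fire the alert. Two slow requests push the 19th-ranked sample above the threshold and the alert fires, which illustrates how the percentile choice sets tolerance for outliers.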

- How do you foster a culture of continuous improvement in DevOps teams supporting a Cloud Contact Center?

Answer:

I champion a blameless culture in which issues become learning opportunities. I hold regular retrospectives, pipeline performance reviews, and continuous refactoring sessions. I encourage experimentation through feature flags and sandbox environments. Leadership support helps embed a mindset of agility, accountability, and innovation, ultimately enhancing the platform’s maturity and the value delivered to the business.
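Feature flags make experimentation safe. A capability can be exposed to a small, stable slice of traffic and then switched off without a redeploy. The sketch below shows deterministic percentage bucketing, which hashes a flag name together with a stable key. The flag name, the agent-ID key, and the in-memory configuration are hypothetical. Commercial flag services implement richer targeting.

```python
"""Deterministic percentage rollout for a feature flag (illustrative)."""
import hashlib

# Hypothetical in-memory flag configuration: flag name -> rollout percentage (0-100).
FLAGS = {"agent-assist-summaries": 10}


def bucket(flag: str, key: str) -> int:
    """Map (flag, key) to a stable bucket in 0..99 using SHA-256."""
    digest = hashlib.sha256(f"{flag}:{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(flag: str, key: str) -> bool:
    """True if this key falls inside the flag's rollout percentage.

    Unknown flags default to off, so removing a flag from config acts as a kill switch.
    """
    return bucket(flag, key) < FLAGS.get(flag, 0)


if __name__ == "__main__":
    agents = [f"agent-{i}" for i in range(1000)]
    enabled = sum(is_enabled("agent-assist-summaries", a) for a in agents)
    print(f"{enabled} of {len(agents)} agents see the feature")
```

Each key's bucket is fixed, so raising the percentage from 10 to 20 keeps the original cohort enabled and adds new agents. This keeps experiment groups stable as a rollout widens.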

Appendix IV – Mermaid Architecture Diagram Code

This diagram is a conceptual mapping of architecture layers, leadership functions, and representative use cases for a healthcare access and contact center modernization program. It is an illustrative taxonomy, not a prescribed vendor architecture.

```mermaid
%% Increase font size
%%{init: {
  'theme': 'default',
  'themeVariables': {
    'fontSize': '16px',
    'lineHeight': '24px'
  },
  'flowchart': {
    'nodeSpacing': 70,
    'rankSpacing': 260
  }
}}%%
graph TB
  classDef cluster fill:#f7f7f7,stroke:#555,stroke-width:1px;
  classDef usecase fill:#ffffff,stroke:#777,stroke-width:1px;
  classDef layer fill:#e8f1ff,stroke:#3355aa,stroke-width:1px;
  classDef func fill:#e9f7ef,stroke:#2e7d32,stroke-width:1px;
  classDef gov fill:#fff3cd,stroke:#b8860b,stroke-width:1px;

  %% ARCHITECTURE LAYERS
  subgraph LAYERS["Architecture Layers"]
    direction TB
    L1["Engagement Channels & Digital Front Door"]
    L2["Access & Orchestration"]
    L3["AI-Enabled Self-Service & Automation"]
    L4["Data, Analytics & Measurement"]
    L5["Platform, Security & Resilience"]
  end
  class L1,L2,L3,L4,L5 layer;

  %% FUNCTIONS
  subgraph FUNCTIONS["Leadership Functions"]
    direction TB
    F_PA["Patient Access"]
    F_DP["Digital Products"]
    F_AE["Marketing & Experience Analytics"]
    F_DE["Data Engineering"]
    F_DPR["Digital Programs"]
    F_PE["Platform Engineering"]
    F_GOV["Compliance / Governance"]
  end
  class F_PA,F_DP,F_AE,F_DE,F_DPR,F_PE func;
  class F_GOV gov;

  %% CLUSTER 1
  subgraph C1["1. Access Modernization & Intelligent Routing"]
    direction TB
    UC1_1["Modernized Scheduling & Queue Orchestration"]
    UC1_2["Intelligent Triage & Skills-Based Routing"]
    UC1_3["Identity Resolution & Context-Aware Access"]
  end
  class C1 cluster;
  class UC1_1,UC1_2,UC1_3 usecase;
  UC1_1 --> L1
  UC1_1 --> L2
  UC1_2 --> L1
  UC1_2 --> L2
  UC1_2 --> L3
  UC1_3 --> L1
  UC1_3 --> L2
  UC1_1 --> F_PA
  UC1_1 --> F_DP
  UC1_1 --> F_DPR
  UC1_1 --> F_PE
  UC1_2 --> F_PA
  UC1_2 --> F_DP
  UC1_2 --> F_AE
  UC1_2 --> F_PE
  UC1_3 --> F_DP
  UC1_3 --> F_DPR
  UC1_3 --> F_AE

  %% CLUSTER 2
  subgraph C2["2. AI-Enabled Self-Service & Automation"]
    direction TB
    UC2_1["Virtual Agent / Conversational Self-Service"]
    UC2_2["Agent Assist (LLM / NLP / Knowledge Surfacing)"]
    UC2_3["RPA for Registration, Eligibility, Verification"]
  end
  class C2 cluster;
  class UC2_1,UC2_2,UC2_3 usecase;
  UC2_1 --> L2
  UC2_1 --> L3
  UC2_2 --> L2
  UC2_2 --> L3
  UC2_3 --> L3
  UC2_3 --> L5
  UC2_1 --> F_DP
  UC2_1 --> F_PA
  UC2_1 --> F_DPR
  UC2_1 --> F_PE
  UC2_2 --> F_DP
  UC2_2 --> F_PA
  UC2_2 --> F_DE
  UC2_2 --> F_PE
  UC2_3 --> F_DE
  UC2_3 --> F_DPR
  UC2_3 --> F_PE

  %% CLUSTER 3
  subgraph C3["3. Omnichannel Governance & Patient Engagement"]
    direction TB
    UC3_1["Cross-Channel Workflow & Experience Governance"]
    UC3_2["Personalization, Messaging & Proactive Outreach"]
  end
  class C3 cluster;
  class UC3_1,UC3_2 usecase;
  UC3_1 --> L1
  UC3_1 --> L2
  UC3_1 --> L5
  UC3_2 --> L1
  UC3_2 --> L2
  UC3_2 --> L4
  UC3_1 --> F_DP
  UC3_1 --> F_PA
  UC3_1 --> F_DPR
  UC3_1 --> F_GOV
  UC3_2 --> F_DP
  UC3_2 --> F_AE
  UC3_2 --> F_PA
  UC3_2 --> F_DPR

  %% CLUSTER 4
  subgraph C4["4. Data, Analytics & Measurement"]
    direction TB
    UC4_1["Operational Dashboards & Contact Center KPIs"]
    UC4_2["Journey Analytics & Attribution Modeling"]
    UC4_3["Event Instrumentation & Measurement Fidelity"]
  end
  class C4 cluster;
  class UC4_1,UC4_2,UC4_3 usecase;
  UC4_1 --> L4
  UC4_2 --> L4
  UC4_3 --> L4
  UC4_3 --> L1
  UC4_1 --> F_AE
  UC4_1 --> F_DE
  UC4_1 --> F_PA
  UC4_2 --> F_AE
  UC4_2 --> F_DE
  UC4_2 --> F_DP
  UC4_3 --> F_DE
  UC4_3 --> F_DP
  UC4_3 --> F_AE

  %% CLUSTER 5
  subgraph C5["5. Platform Engineering, Safety & Resilience"]
    direction TB
    UC5_1["Cloud Architecture, APIs, Identity & Integration"]
    UC5_2["Observability, Monitoring, Failover & Compliance"]
    UC5_3["Safety Controls for AI & Use Case Governance"]
  end
  class C5 cluster;
  class UC5_1,UC5_2,UC5_3 usecase;
  UC5_1 --> L5
  UC5_1 --> L2
  UC5_2 --> L5
  UC5_3 --> L3
  UC5_3 --> L5
  UC5_1 --> F_PE
  UC5_1 --> F_DE
  UC5_1 --> F_DPR
  UC5_2 --> F_PE
  UC5_2 --> F_DE
  UC5_2 --> F_GOV
  UC5_3 --> F_PE
  UC5_3 --> F_DP
  UC5_3 --> F_GOV
```
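Because the edges in the diagram are maintained by hand, they can drift out of sync with the use-case definitions. One option, offered here as a hypothetical maintenance aid rather than part of the study's method, is to keep the mapping as data, validate it, and generate the Mermaid edge lines from it. The sketch covers only Cluster 1, with the mapping copied from the diagram. The function names are illustrative.

```python
"""Generate Mermaid edge lines from a use-case mapping kept as data (Cluster 1 only)."""
from typing import Dict, List

Mapping = Dict[str, Dict[str, List[str]]]

# Copied from Cluster 1 of the diagram: use case -> architecture layers and leadership functions.
USE_CASES: Mapping = {
    "UC1_1": {"layers": ["L1", "L2"], "functions": ["F_PA", "F_DP", "F_DPR", "F_PE"]},
    "UC1_2": {"layers": ["L1", "L2", "L3"], "functions": ["F_PA", "F_DP", "F_AE", "F_PE"]},
    "UC1_3": {"layers": ["L1", "L2"], "functions": ["F_DP", "F_DPR", "F_AE"]},
}

# Node ids declared in the diagram's layer and function subgraphs.
KNOWN_NODES = {"L1", "L2", "L3", "L4", "L5",
               "F_PA", "F_DP", "F_AE", "F_DE", "F_DPR", "F_PE", "F_GOV"}


def validate(use_cases: Mapping) -> List[str]:
    """Each use case needs at least one layer and one function, and only known node ids."""
    errors: List[str] = []
    for uc, links in use_cases.items():
        if not links["layers"]:
            errors.append(f"{uc}: no architecture layer")
        if not links["functions"]:
            errors.append(f"{uc}: no leadership function")
        for target in links["layers"] + links["functions"]:
            if target not in KNOWN_NODES:
                errors.append(f"{uc}: unknown node {target}")
    return errors


def mermaid_edges(use_cases: Mapping) -> List[str]:
    """Emit one 'A --> B' line per link, layers first, matching the diagram's ordering."""
    return [f"{uc} --> {target}"
            for uc, links in use_cases.items()
            for target in links["layers"] + links["functions"]]


if __name__ == "__main__":
    problems = validate(USE_CASES)
    print("\n".join(problems) if problems else "\n".join(mermaid_edges(USE_CASES)))
```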

Appendix V – Visio Architecture Diagrams

Image I: Leadership Functions and Architecture Layers to Use Cases

Image II: Leadership Functions to Use Cases

Image III: Architecture Layers to Use Cases